“The Big Page”

A single page containing all the modules

R Basics

R & Statistical Programming

Purpose of statistical programming software

Unlike spreadsheet applications (like Excel) or point-and-click statistical analysis software (SPSS), statistical programming software is based around a script-file where the user writes a series of commands to be performed,

Advantages of statistical programming software

Data analysis process is reproducible and transparent.

Due to the open-ended nature of language-based programming, there is far more versatility and customizability in what you can do with data.

Typically statistical programming software has a much more comprehensive range of built-in analysis functions than spreadsheets etc.

Characteristics of R

R is an open-source language specifically designed for statistical computing (and it’s the most popular choice among statisticians)

Because of its popularity and open-source nature, the R community’s package development means it has the most prewritten functionality of any data analysis software.

Differs from software like Stata, however, in that while you can use prewritten functions, it is equally adept at programming solutions for yourself.

Because it’s usage is broader, R also has a steeper learning curve than Stata.

Comparison to other statistical programming software

- Stata: The traditional choice of (academic) economists.

- Stata is more specifically econometrics focused and is much more command-oriented. Easier to use for standard applications, but if there’s not a Stata command for what you want to do, it’s harder to write something yourself.

- Stata is also very different than R in that you can only ever work with one dataset at a time, while in R, it’s typical to have a number of data objects in the environment.

- Stata is more specifically econometrics focused and is much more command-oriented. Easier to use for standard applications, but if there’s not a Stata command for what you want to do, it’s harder to write something yourself.

SAS: Similar to Stata, but more commonly used in business & the private sector, in part because it’s typically more convenient for massive datasets. Otherwise, I think it’s seen as a bit older and less user-friendly.

Python: Another option based more on programming from scratch and with less prewritten commands. Python isn’t specific to math & statistics, but instead is a general programming language used across a range of fields.

Probably the most similar software choice to R at this point, with better general use (and programming ease) at the cost of less package development specific to econometrics/data analysis.Matlab: Popular in macroeconomics and theory work, not so much in empirical work. Matlab is powerful, but is much more based on programming “from scratch” using matrices and mathematical expressions.

Useful resources for learning R

- DataCamp: interactive online lessons in R.

- Some of the courses are free (particularly community-written lessons like the one you’ll do today), but for paid courses, DataCamp costs about 300 SEK / mo.

RStudio Cheat Sheets: Very helpful 1-2 page overviews of common tasks and packages in R.

Quick-R: Website with short example-driven overviews of R functionality.

StackOverflow: Part of the Stack Exchange network, StackOverflow is a Q&A community website for people who work in programming. Tons of incredibly good R users and developers interact on StackExchange, so it’s a great place to search for answers to your questions.

R-Bloggers: Blog aggregagator for posts about R. Great place to learn really cool things you can do in R.

R for Data Science: Online version of the book by Hadley Wickham, who has written many of the best packages for R, including the Tidyverse, which we will cover.

Getting Started in R

RStudio GUI

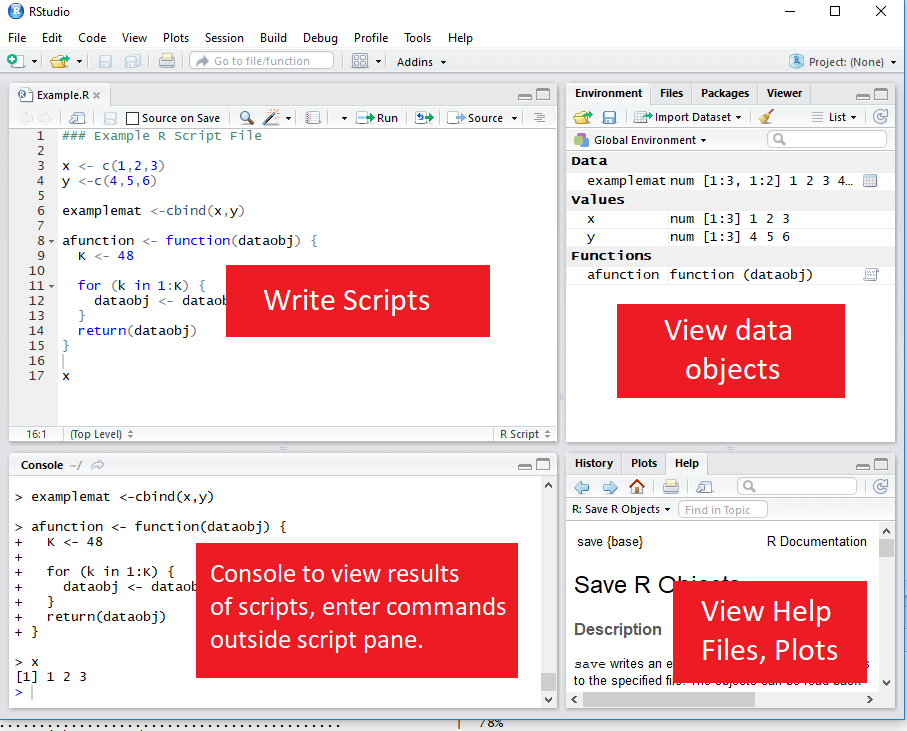

RStudio is an is an integrated development environment (IDE).

This means that in addition to a script editor, it also let’s you view your environments, data objects, plots, help files, etc directly within the application.

Executing code from the script

To execute a section of code, highlight the code and click “Run” or use Ctrl-Enter.

For a single line of code, you don’t need to highlight, just click into that line.

To execute the whole document, the hotkey is Ctrl-Shift-Enter.

Style advise

Unlike Stata, with R you don’t need any special code to write multiline code - it’s already the default (functions are written with parentheses, so its clear when the line actually ends.)

So there’s no excuse for really long lines. Accepted style suggests using a 80-character limit for your lines.

- RStudio has the option to show a guideline for margins. Use it!

- Go to Tools -> Global Options -> Code -> Display, then select Show Margin and enter 80 characters.

You can also write multiple expressions on the same line by using ; as a manual line break.

Help files in R

You can access the help file for any given function using the help function. You can call it a few different ways:

- In the console, use help()

- In the console, use ? immediately followed by the name of the function (no space inbetween)

- In the Help pane, search for the function in question.

? is shorter, so that’s the most frequent method.

# Help on the lm (linear regression) function

?lmSetting the working directory

To set the working directory, use the function setwd(). The argument for the function is simply the path to the working directory, in quotes.

However: be sure that the slashes in the path are forward slashes (/). For Windows, this is not the case if you copy the path from File Explorer so you’ll need to change them.

# Set Working Directory

setwd("C:/Users/Andrew/Documents/MyProject")

Data Types & Operations

Math operations in R

Examples of basic mathematical operations in R:

# Addition and Subtraction

2 + 2## [1] 4# Multiplication and Division

2*2 + 2/2## [1] 5# Exponentiation and Logarithms

2^2 + log(2)## [1] 4.693147Logical operations in R

You can also evaluate logical expressions in R

## Less than

5 <= 6## [1] TRUE## Greater than or equal to

5 >= 6## [1] FALSE## Equality

5 == 6## [1] FALSE## Negatiion

5 != 6## [1] TRUEYou can also use AND ( &) and OR ( |) operation with logical expressions:

## Is 5 equal to 5 OR 5 is equal to 6

(5 == 5) | (5 == 6)## [1] TRUE## 5 less 6 AND 6 < 5

(5 < 6) & (7 < 6)## [1] FALSEDefining an object

To define an object, use <-. For example

# Assign 2 + 2 to the variable x

x <- 2 + 2Note: In R, there is no distinction between defining and redefining an object (a la gen/replace in Stata).

y <- 4 # Define y

y <- y^2 # Redefine y

y #Print y ## [1] 16Data classes

Data elements in R are categorized into a few seperate classes (ie types)

numeric: Data that should be interpreted as a number.

logical: Data that should be interpreted as a logical statment, ie. TRUE or FALSE.

- character: Strings/text.

- Note, depending on how you format your data, elements that may look like logical or numeric may instead be character.

factor: In affect, a categorical variable. Value may be text, but R interprets the variable as taking on one of limited number of possible values (e.g. sex, municipality, industry etc)

What’s the object class?

a <- 2; class(a)## [1] "numeric"b <- "2"; class(b)## [1] "character"c <- TRUE; class(c)## [1] "logical"d <- "True"; class(d)## [1] "character"

Vectors & Matrices

Vectors

The basic data structure containing multiple elements in R is the vector.

An R vector is much like the typical view of a vector in mathematics, ie it’s basically a 1D array of elements.

Typical vectors are of a single-type (these are called atomic vectors).

A list vector can also have elements of different types.

Creating vectors

To create a vector, use the function c().

# Create `days` vectors

days <- c("Mon","Tues","Wed",

"Thurs", "Fri")

# Create `temps` vectors

temps <- c(13,18,17,20,21)

# Display `temps` vector

temps## [1] 13 18 17 20 21Naming vectors

You can name a vector by assigning a vector of names to c(), where the vector to be named goes in the parentheses.

# Assign `days` as names for `temps` vector

names(temps) <- days

# Display `temps` vector

temps## Mon Tues Wed Thurs Fri

## 13 18 17 20 21Subsetting vectors

There are multiple ways of subsetting data in R. One of the easiest methods for vectors is to put the subset condition in brackets:

# Subset temps greater than or equal to 10

temps[temps>=18]## Tues Thurs Fri

## 18 20 21Operations on vectors

Operations on vectors are element-wise. So if 2 vectors are added together, each element of the \(2^{nd}\) vector would be added to the corresponding element from the \(1^{st}\) vector.

# Temp vector for week 2

temps2 <- c(8,10,10,15,16)

names(temps2) <- days

# Create average temperature by day

avg_temp <- (temps + temps2) / 2

# Display `avg_temp`

avg_temp## Mon Tues Wed Thurs Fri

## 10.5 14.0 13.5 17.5 18.5Matrices

Data in a 2-dimensional structure can be represented in two formats, as a matrix or as a data frame.

- A matrix is used for 2D data structures of a single data type (like atomic vectors).

- Usually, matrices are composed of numeric objects.

To create a matrix, use the matrix() command.

The syntax of ** matrix() ** is:

matrix(x, nrow=a, ncol=b, byrow=FALSE/TRUE)-x is the data that will populate the matrix.

-nrow and ncol specify the number of rows and columns, respectively.

Generally need to specify just 1 since the number of elements and a single condition will determine the other.

-byrow specifies whether to fill in the elements by row or column. The default is byrow=FALSE, ie the data is filled in by column.

Creating a matrix from scratch

A simple example of creating a matrix would be:

matrix(1:6, nrow=2, ncol=3, byrow=FALSE)## [,1] [,2] [,3]

## [1,] 1 3 5

## [2,] 2 4 6Note the difference in appearance if we instead byrow=TRUE

matrix(1:6, nrow=2, ncol=3, byrow=TRUE)## [,1] [,2] [,3]

## [1,] 1 2 3

## [2,] 4 5 6Using the same c() function as in the creation of a vector, we can specify the values of a matrix:

matrix(c(13,18,17,20,21,

8,10,10,15,16),

nrow=2, byrow=TRUE)## [,1] [,2] [,3] [,4] [,5]

## [1,] 13 18 17 20 21

## [2,] 8 10 10 15 16- Note that the line breaks in the code are purely for

readability purposes. Unlike Stata, R allows you to break

code over multiple lines without any extra line break syntax.

Creating a matrix from vectors

Instead of entering in the matrix data yourself, you may want to make a matrix from existing data vectors:

# Create temps matrix

temps.matrix <- matrix(c(temps,temps2), nrow=2,

ncol=5, byrow=TRUE)

# Display matrix

temps.matrix## [,1] [,2] [,3] [,4] [,5]

## [1,] 13 18 17 20 21

## [2,] 8 10 10 15 16Naming rows and columns

-Naming rows and columns of a matrix is pretty similar to naming vectors.

-Only here, instead of using names(), we use rownames() and colnames()

# Create temps matrix

rownames(temps.matrix) <- c("Week1", "week2")

colnames(temps.matrix) <- days

# Display matrix

temps.matrix## Mon Tues Wed Thurs Fri

## Week1 13 18 17 20 21

## week2 8 10 10 15 16Matrix operations

In R, matrix multiplication is denoted by %\(*\)%, as in A %\(*\)% B

A * B instead performs element-wise (Hadamard) multiplication of matrices, so that A * B has the entries \(a_1 b_1\), \(a_2 b_2\) etc.

- An important thing to be aware of with R’s A * B notation, however, is that if either of the terms is a 2D vector, the terms of this vector will be distributed elementwise to each colomn of the matrix.

Elementwise operations with a vector and Matrix

vecA; matB## [1] 1 2## [,1] [,2] [,3]

## [1,] 1 2 3

## [2,] 4 5 6vecA * matB## [,1] [,2] [,3]

## [1,] 1 2 3

## [2,] 8 10 12

Data Frames

Creating a data frame

Most of the time you’ll probably be working with datasets that are recognized as data frames when imported into R.

But you can also easily create your own data frames.

This might be as simple as converting a matrix to a data frame:

mydf <- as.data.frame(matB)

mydf## V1 V2 V3

## 1 1 2 3

## 2 4 5 6- Another way of creating a data frame is to combine other vectors or matrices (of the same length) together.

mydf <- data.frame(vecA,matB)

mydf## vecA X1 X2 X3

## 1 1 1 2 3

## 2 2 4 5 6Defining a column of a data frame (or other 2D object):

Once you have a multidimensional data object, you will usually want to create or manipulate particular columns of the object.

The default way of invoking a named column in R is by appending a dollar sign and the column name to the data object.

Example of adding a new column to a data frame

wages # View wages data frame## wage schooling sex exper

## 1 134.23058 13 female 8

## 2 249.67744 13 female 11

## 3 53.56478 10 female 11wages$expersq <- wages$exper^2; wages # Add expersq## wage schooling sex exper expersq

## 1 134.23058 13 female 8 64

## 2 249.67744 13 female 11 121

## 3 53.56478 10 female 11 121Viewing the structure of a data frame

Like viewing the class of a homogenous data object, it’s often helpful to view the structure of data frames (or other 2D objects).

- You can easily do this using the ** str() ** function.

# View the structure of the wages data frame

str(wages)## 'data.frame': 3 obs. of 5 variables:

## $ wage : num 134.2 249.7 53.6

## $ schooling: int 13 13 10

## $ sex : Factor w/ 2 levels "female","male": 1 1 1

## $ exper : int 8 11 11

## $ expersq : num 64 121 121Changing the structure of a data frame

A common task is to redefine the classes of columns in a data frame.

Common commands can help you with this when the data is formatted suitably:

as.numeric() will take data that looks like numbers but are formatted as characters/factors and change their formatting to numeric.

as.character() will take data formatted as numbers/factors and change their class to character.

as.factor() will reformat data as factors, taking by default the unique values of each column as the possible factor levels.

More about factors

Although as.factor() will suggest factors from the data, you may want more control over how factors are specified.

With the factor() function, you supply the possible values of the factor and you can also specify ordering of factor values if your data is ordinal.

Example of creating ordered factors

A dataset on number of extramarital affairs from Fair (Econometrica 1977) has the following variables: number of affairs, years married, presence of children, and a self-rated (Likert scale) 1-5 measure of marital happiness.

str(affairs) # view structure## 'data.frame': 3 obs. of 4 variables:

## $ affairs: num 0 0 1

## $ yrsmarr: num 15 1.5 7

## $ child : Factor w/ 2 levels "no","yes": 2 1 2

## $ mrating: int 1 5 3## Format mrating as ordered factor

affairs$mrating <-factor(affairs$mrating,

levels=c(1,2,3,4,5), ordered=TRUE)

str(affairs)## 'data.frame': 3 obs. of 4 variables:

## $ affairs: num 0 0 1

## $ yrsmarr: num 15 1.5 7

## $ child : Factor w/ 2 levels "no","yes": 2 1 2

## $ mrating: Ord.factor w/ 5 levels "1"<"2"<"3"<"4"<..: 1 5 3Note that the marital rating (mrating) initially was stored as an integer, which is incorrect. Using factors preserves the ordering while not asserting a numerical relationship between values.

Selections and subsets in data frames

Similar to subsetting a vector, matrices & data frames can also be subsetted for both rows and columns by placing the selection arguments in brackets after the name of the data object:

Arguments can be:

Row or column numbers (eg mydf[1,3])

Row or column names

Rows (ie observations) that meet a given condition

Example of subsetting a data frame

# Subset of wages df with schooling > 10, exper > 10

wages[(wages$schooling > 10) & (wages$exper > 10),]## wage schooling sex exper expersq

## 2 249.6774 13 female 11 121Notice that the column argument was left empty, so all columns are returned by default.

Data Preparation using the Tidyverse

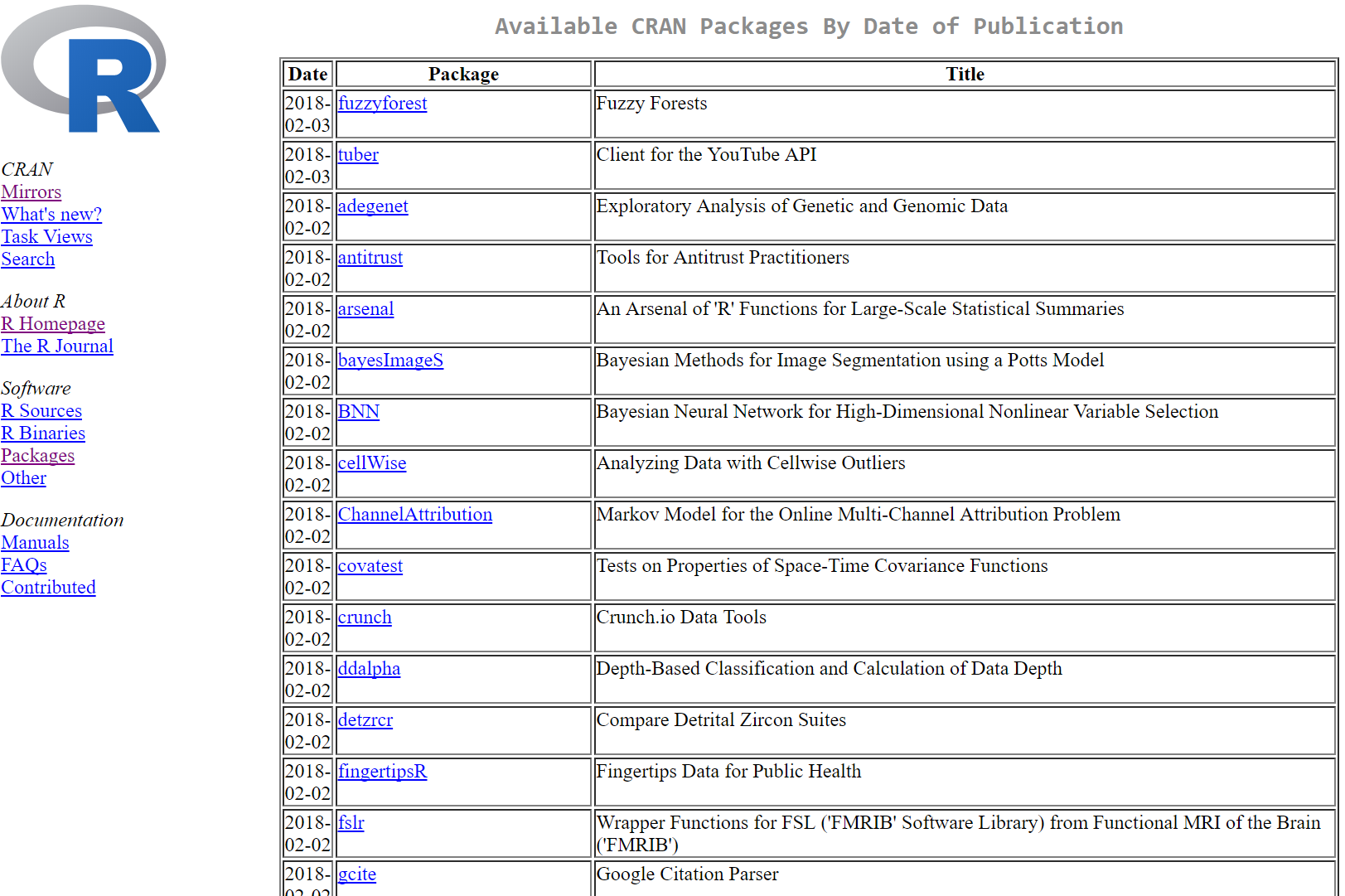

Packages in R

Role of Packages in R

Packages in R are similar to user-written commands (think ssc install) in Stata.

But most things you do in Stata probably use core Stata commands.

In R, most of your analysis will probably be done using packages.

Installing and using a package

To install a package, use the function (preferably in the console) install.packages()

- To begin with, let’s install 2 packages:

install.packages("tidyverse") # Install tidyverse

install.packages("rio") # Install rioLoading a package during analysis

Unlike Stata, in R you need to declare what packages you will be using at the beginning of each R document.

To do this, use the library() function.

-require() also works, but its use is discouraged for this purpose.

library("tidyverse") # Install tidyverse

library("rio") # Install rio

Data Prep Preliminaries

Import and export using rio

Previously, importing and exporting data was a mess, with a lot of different functions for different file formats:

- Stata DTA files alone required two functions: read.dta (for Stata 6-12 DTA files), read.dta13 (for Stata 13 and later files), etc.

The rio package simplifies this by reducing all of this to just one function, import()

- Automatically determines the file format of the file and uses the appropriate function from other packages to load in a file.

PISA_2015 <- import("data/PISA2015.sas7bdat")

PISA_2015[1:5,1:6]## CNTRYID CNT CNTSCHID CYC NatCen Region

## 1 8 ALB 800001 06MS 000800 800

## 2 8 ALB 800002 06MS 000800 800

## 3 8 ALB 800003 06MS 000800 800

## 4 8 ALB 800004 06MS 000800 800

## 5 8 ALB 800005 06MS 000800 800export(PISA_2015, "PISA_2015.rds")Tibbles: an update to the data frame

Last class, we covered data frames—the most basic data object class for data sets with a mix of data class.

Today, we introduce one final data object: the tibble!

The tibble can be thought of as an update to the data frame—and it’s the first part of the tidyverse package that we’ll look at.

Tibble vs data frames

There are three main benefits to the tibble:

- Displaying data frames:

- If you display a data frame, it will print as much as much output as allowed by the “max.print” option in the R environment. With large data sets, that’s far too much. Tibbles by default print the first 10 rows and as many columns as will fit in the window.

- Partial matching in data frames:

- When using the $ method to reference columns of a data frame, partial names will be matched if the reference isn’t exact. This might sound good, but the only real reason for there to be a partial match is a typo, in which case the match might be wrong.

- Tibbles are required for some functions.

Creating or converting to tibbles

The syntax for creating tibbles exactly parallels the syntax for data frames:

tibble() creates a tibble from underlying data or vectors.

as_tibble() coerces an existing data object into a tibble.

PISA_2015 <- as_tibble(PISA_2015); PISA_2015[1:5,1:5]## # A tibble: 5 x 5

## CNTRYID CNT CNTSCHID CYC NatCen

## <dbl> <chr> <dbl> <chr> <chr>

## 1 8 ALB 800001 06MS 000800

## 2 8 ALB 800002 06MS 000800

## 3 8 ALB 800003 06MS 000800

## 4 8 ALB 800004 06MS 000800

## 5 8 ALB 800005 06MS 000800Glimpse

Another tidyverse function that’s very useful is glimpse() , a function very similar to str().

Both functions display information about the structure of a data object.

str() provides more information, such as column (variable) attributes embedded from external data formats, but consequently is much less readable for complex data objects.

glimpse() provides only column names, classes, and some data values (much more readable)

I will often use str() when I want more detailed information about data structure, but use glimpse() for quicker glances at the data.

Pipes

Another major convenience enhancement from the tidyverse is pipes, denoted %>%,

Pipes allow you to combine multiple steps into a single piece of code.

Specifically, after performing a function in one step, a pipe takes the data generated from the first step and uses it as the data input to a second step.

Pipes Example

barro.lee.data <- import("data/BL2013_MF1599_v2.1.dta") %>%

as_tibble() %>% glimpse(width = 50)## Observations: 1,898

## Variables: 20

## $ BLcode <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ country <chr> "Algeria", "Algeria", "Alge…

## $ year <dbl> 1950, 1955, 1960, 1965, 197…

## $ sex <chr> "MF", "MF", "MF", "MF", "MF…

## $ agefrom <dbl> 15, 15, 15, 15, 15, 15, 15,…

## $ ageto <dbl> 999, 999, 999, 999, 999, 99…

## $ lu <dbl> 80.68459, 81.05096, 82.6111…

## $ lp <dbl> 17.563400, 17.018442, 14.31…

## $ lpc <dbl> 3.745905, 3.464397, 3.06939…

## $ ls <dbl> 1.454129, 1.639253, 2.75251…

## $ lsc <dbl> 0.4595877, 0.4952279, 1.049…

## $ lh <dbl> 0.2978759, 0.2594140, 0.322…

## $ lhc <dbl> 0.16479027, 0.14177565, 0.1…

## $ yr_sch <dbl> 0.8464569, 0.8350149, 0.880…

## $ yr_sch_pri <dbl> 0.7443995, 0.7284052, 0.706…

## $ yr_sch_sec <dbl> 0.09280412, 0.09858591, 0.1…

## $ yr_sch_ter <dbl> 0.009253278, 0.008023791, 0…

## $ pop <dbl> 5241, 5699, 6073, 6374, 710…

## $ WBcode <chr> "DZA", "DZA", "DZA", "DZA",…

## $ region_code <chr> "Middle East and North Afri…

Data Preparation

Tidyverse and the verbs of data manipulation

A motivating principle behind the creation of the tidyverse was the language of programming should really behave like a language.

Data manipulation in the tidyverse is oriented around a few key “verbs” that perform common types of data manipulation.

- filter() subsets the rows of a data frame based on their values.

- select() keeps variables (columns) based on their names.

- mutate() adds new variables that are functions of existing variables.

- summarize() creates a number of summary statistics out of many values.

- arrange() changes the ordering of the rows.

Note: the first argument for each these functions is the data object (so pipe!).

Filtering data

Filtering keeps observations (rows) based on conditions.

- Just like using use subset conditions in the row arguments of a bracketed subset

# Using brackets

wages[(wages$schooling > 10) & (wages$exper > 10),] ## wage schooling sex exper

## 2 249.6774 13 female 11# Using filter

wages %>% filter(schooling > 10,exper > 10) ## wage schooling sex exper

## 1 249.6774 13 female 11Notice a couple of things about the output:

- It doesn’t look like we told filter() what data set we would be filtering.

- That’s because the data set has already been supplied by the pipe. We could have also written the filter as:

filter(wages, schooling > 10,exper > 10) ## wage schooling sex exper

## 1 249.6774 13 female 11- We didn’t need to use the logical &. Though multiple conditions can still be written in this way with filter(), the default is just to separate them with a comma.

Selecting data

Just like filter is in many ways a more convenient form of writing out bracketed row subset conditions, the verb select() is largely a more convenient method for writing column arguments.

# Using brackets

wages_row1[,c("wage","schooling","exper")]## wage schooling exper

## 1 134.2306 13 8# Using select

wages_row1 %>% select(wage,schooling,exper) ## wage schooling exper

## 1 134.2306 13 8An example of dropping a column

One option we have not covered so far in creating subsets is dropping rows or columns.

R has a specific notation for this, easily used with select():

wages_row1 # What wages_row1 looks like:## wage schooling sex exper

## 1 134.2306 13 female 8wages_row1 %>% select(-exper) #drop exper## wage schooling sex

## 1 134.2306 13 femaleDropping columns (or rows) using the - notation also works with brackets, but only when using the number location of the row or column to be dropped.

wages_row1[,-4] # works## wage schooling sex

## 1 134.2306 13 female# wages_row1[,-"exper"] does not workBecause of the ability to use name arguments, dropping with select() is generally easier.

“Mutating” data

Creating new variables that are functions of existing variables in a data set can be done with mutate().

mutate() takes as its first argument the data set to be used and the equation for the new variable:

wages <- wages %>%

mutate(expsq = exper^2) # Create expersq

wages # Display wages## wage schooling sex exper expsq

## 1 134.23058 13 female 8 64

## 2 249.67744 13 female 11 121

## 3 53.56478 10 female 11 121Summarizing data

Summary statistics can also be easily created using the tidyverse function summarize()

The summarize functions uses summary statistic functions in R to create a new summary tibble, with syntax largely identical to mutate().

Let’s try summarizing with the mean() summary statistic.

wages %>%

summarize(avg_wage = mean(wage))## avg_wage

## 1 145.8243Summary Statistics functions in R

There are a number of summary statistics available in R, which can be used either with the summarize() command or outside of it:

Measures of central tendency and spread:

Position:

Count:

Multiple summary variables

Let’s look at an example of using multiple summary variables with a larger 50-observation sample for the wages data set.

wages %>%

summarize(avg.wage = mean(wage), sd.wage = sd(wage),

avg.exper = mean(exper), sd.exper = sd(exper))## # A tibble: 1 x 4

## avg.wage sd.wage avg.exper sd.exper

## <dbl> <dbl> <dbl> <dbl>

## 1 5942. 17526. 7.47 2.08Grouping data

Creating summary statistics by group is another routine task. This is accommodated in the tidyverse using the group_by().

- The arguments of group_by(), in addition to the data set, are simply the grouping variables separated by commas.

wages %>% group_by(sex) %>%

summarize(avg.wage = mean(wage), sd.wage = sd(wage))## # A tibble: 2 x 3

## sex avg.wage sd.wage

## <fct> <dbl> <dbl>

## 1 female 5473. 18883.

## 2 male 6410. 16711.Arranging (sorting) data

If you want to sort your data by the values of a particular variable, you can easily do so as well with the arrange()

function.

wages[1:3,] %>% arrange(exper)## # A tibble: 3 x 4

## wage schooling sex exper

## <dbl> <int> <fct> <int>

## 1 175. 12 female 5

## 2 103. 11 male 7

## 3 1411. 14 female 8Not: arrange() sorts values in ascending order by default. If you want to sort in descending order, wrap the variable name inside desc() in the function.

Sampling from data

Creating a sample from a data set in R is made easy by two main function in R: sample_n and sample_frac.

Syntax:

sample_n(data, size, replace = FALSE/TRUE)

sample_frac(data, size = 1, replace = FALSE/TRUE)

A data prep example with fuel economy data

Let’s use tidyverse data manipulation verbs to work through a practical data prep problem from start to finish.

For the problem, Let’s use fuel economy data again, but with half of the data set. The data comes from the vehicles data set in the fueleconomy package.

# install.packages("fueleconomy") # Run only once

library(fueleconomy)Now let’s look at how fuel efficiency has changed over time in the data set. Specifically, let’s create descriptive statistics of fuel efficiency by year for “normal” passenger vehicles (4-8 cylinders).

What’s in the data set?

glimpse(vehicles[2:12], width=50)## Observations: 33,442

## Variables: 11

## $ make <chr> "AM General", "AM General", "AM G…

## $ model <chr> "DJ Po Vehicle 2WD", "DJ Po Vehic…

## $ year <int> 1984, 1984, 1984, 1984, 1985, 198…

## $ class <chr> "Special Purpose Vehicle 2WD", "S…

## $ trans <chr> "Automatic 3-spd", "Automatic 3-s…

## $ drive <chr> "2-Wheel Drive", "2-Wheel Drive",…

## $ cyl <int> 4, 4, 6, 6, 4, 6, 6, 4, 4, 6, 4, …

## $ displ <dbl> 2.5, 2.5, 4.2, 4.2, 2.5, 4.2, 3.8…

## $ fuel <chr> "Regular", "Regular", "Regular", …

## $ hwy <int> 17, 17, 13, 13, 17, 13, 21, 26, 2…

## $ cty <int> 18, 18, 13, 13, 16, 13, 14, 20, 2…Create summary tibble

annual.mpg <- vehicles %>% sample_frac(0.5) %>%

filter(cyl %in% 4:8) %>% group_by(year) %>%

summarize(hwy.avg = mean(hwy), hwy.sd = sd(hwy),

city.avg = mean(cty), city.sd = sd(cty)) %>%

arrange(desc(city.avg))Note: Here I used %in%, which works like inrange in Stata. You could alternately write two inequalities to achieve the same thing.

# Print annual.mpg

annual.mpg## # A tibble: 32 x 5

## year hwy.avg hwy.sd city.avg city.sd

## <int> <dbl> <dbl> <dbl> <dbl>

## 1 2015 28.6 5.42 20.6 4.78

## 2 2014 27.8 6.44 20.4 5.76

## 3 2013 27.3 6.02 20.0 5.78

## 4 2012 26.3 5.83 19.2 5.21

## 5 2011 25.7 5.36 18.8 4.86

## 6 2010 25.3 4.90 18.4 4.38

## 7 1985 22.8 6.21 17.7 4.70

## 8 2009 24.2 4.54 17.6 3.92

## 9 1986 22.4 5.82 17.5 4.48

## 10 1997 23.6 5.11 17.2 3.76

## # … with 22 more rowsSummarizing a data set with the summary() function

Although the tidyverse summarize() function is more powerful, often you just a want a quick look at summary statistics for the whole data set.

- You can easily do this with the base R summary() function, which produces summaries not just for data sets, but also for other R output like the results of a regression.

summary(wages)## wage schooling sex exper

## Min. : 1.69 Min. : 8 female:15 Min. : 3.000

## 1st Qu.: 44.07 1st Qu.:11 male :15 1st Qu.: 6.250

## Median : 160.96 Median :12 Median : 7.500

## Mean : 5941.66 Mean :12 Mean : 7.467

## 3rd Qu.: 1519.01 3rd Qu.:13 3rd Qu.: 8.000

## Max. :73659.06 Max. :15 Max. :13.000

Cleaning data

Common data cleaning tasks

There are a few data cleaning tasks that are pervasive in empirical work:

- Ensure columns have useful names

- Recoding variable values

- Addressing missing values

Renaming columns

Renaming columns is easily accommodated with the tidyverse rename() command.

Syntax:

mydataframe <- mydataframe %>% rename(NewVarName = OldVarName)To see rename() in action, let’s go back to the barro.lee.data educational data set we imported earlier:

Renaming columns example

Let’s look at columns 1 and 7 through 9:

glimpse(barro.lee.data[,c(1,7:9)], width = 50)## Observations: 1,898

## Variables: 4

## $ BLcode <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

## $ lu <dbl> 80.68459, 81.05096, 82.61115, 80…

## $ lp <dbl> 17.563400, 17.018442, 14.313748,…

## $ lpc <dbl> 3.745905, 3.464397, 3.069391, 4.…See how these variable names are uninformative? Let’s use str() now.

str(barro.lee.data[,c(1,7:9)])## Classes 'tbl_df', 'tbl' and 'data.frame': 1898 obs. of 4 variables:

## $ BLcode: num 1 1 1 1 1 1 1 1 1 1 ...

## ..- attr(*, "label")= chr "Country Code"

## ..- attr(*, "format.stata")= chr "%8.0g"

## $ lu : num 80.7 81.1 82.6 80.9 73.6 ...

## ..- attr(*, "label")= chr "Percentage of No Schooling"

## ..- attr(*, "format.stata")= chr "%7.2f"

## $ lp : num 17.6 17 14.3 14.4 19.2 ...

## ..- attr(*, "label")= chr "Percentage of Primary"

## ..- attr(*, "format.stata")= chr "%7.2f"

## $ lpc : num 3.75 3.46 3.07 4.01 5.23 ...

## ..- attr(*, "label")= chr "Percentage of Primary Complete"

## ..- attr(*, "format.stata")= chr "%7.2f"Although the column names here are uninformative, the imported SAS file included variable labels saved as attributes, which can be seen using the more verbose str() function.

barro.lee.data <- barro.lee.data %>%

rename(countrycode = BLcode,

perc.noschool = lu,

perc.primary = lp,

perc.primary.complete = lpc)Now let’s look at the variable names again:

glimpse(barro.lee.data[,c(1,7:9)], width = 50)## Observations: 1,898

## Variables: 4

## $ countrycode <dbl> 1, 1, 1, 1, 1, 1,…

## $ perc.noschool <dbl> 80.68459, 81.0509…

## $ perc.primary <dbl> 17.563400, 17.018…

## $ perc.primary.complete <dbl> 3.745905, 3.46439…Recoding variables

Along with renaming variables, recoding variables is another integral part of data wrangling.

wages[1:4,"sex"] # Look at sex column## # A tibble: 4 x 1

## sex

## <fct>

## 1 female

## 2 female

## 3 male

## 4 malewages$sex <- wages$sex %>% recode("male"=0,

"female"=1) # recode

wages[1:4,"sex"] # Look at sex column## # A tibble: 4 x 1

## sex

## <dbl>

## 1 1

## 2 1

## 3 0

## 4 0Missing Values

Another problem characteristic of observational data is missing data. In R, the way to represent missing data is with the value NA.

- You can recode missing value that should be NA but are code using a different schema either by using brackets, or the tidyverse na_if() function.

## Replace 99-denoted missing data with NA

# bracket method

wages[wages$schooling==99,] <- NA

# tidyverse method

wages$schooling <- wages$schooling %>% na_if(99) You can check for (correctly-coded) missing-values using the is.na() function.

## Missing

wages[is.na(wages$wage),]## # A tibble: 3 x 4

## wage schooling sex exper

## <dbl> <int> <dbl> <int>

## 1 NA 14 1 8

## 2 NA 11 0 8

## 3 NA 10 0 8Note: R does not naturally support multiple types of missingness like other languages, although it’s possible to use the sjmisc package to do this.

Tidy data

Principles of tidy data

Rules for tidy data (from R for Data Science):

- Each variable must have its own column.

- Each observation must have its own row.

- Each value must have its own cell.

Tidy data tools in the tidyverse

There two main tidyverse verbs for making data tidy are:

gather(): reduces variable values are spread over multiples columns into a single column.

spread(): when multiple variables values are stored in the same columns, moves each variable into it’s own column.

Gathering data

If values for a single variable are spread across multiple columns (e.g. income for different years), gather moves this into single “values” column with a “key” column to identify what the different columns differentiated.

Syntax:

gather(data, key, value, columnstocombine)Gather example

earnings.panel## # A tibble: 7 x 3

## person y1999 y2000

## <chr> <dbl> <dbl>

## 1 Elsa 10 15

## 2 Mickey 20 28

## 3 Ariel 17 21

## 4 Gaston 19 19

## 5 Jasmine 32 35

## 6 Peter 22 29

## 7 Alice 11 15earnings.panel <- earnings.panel %>%

gather(key="year", value="wage",y1999:y2000)

earnings.panel## # A tibble: 14 x 3

## person year wage

## <chr> <chr> <dbl>

## 1 Elsa y1999 10

## 2 Mickey y1999 20

## 3 Ariel y1999 17

## 4 Gaston y1999 19

## 5 Jasmine y1999 32

## 6 Peter y1999 22

## 7 Alice y1999 11

## 8 Elsa y2000 15

## 9 Mickey y2000 28

## 10 Ariel y2000 21

## 11 Gaston y2000 19

## 12 Jasmine y2000 35

## 13 Peter y2000 29

## 14 Alice y2000 15Spreading data

Spread tackles the other major problem - that often times (particularly in longitudinal data) many variables are condensed into just a “key” (or indicator) column and a value column.

wages2## person indicator values

## 1 Elsa wage NA

## 2 Mickey wage 174.932480

## 3 Ariel wage 102.668810

## 4 Gaston wage 1.690623

## 5 Jasmine wage 2.166231

## 6 Peter wage 1192.371925

## 7 Alice wage 83.363705

## 8 Elsa wage NA

## 9 Mickey wage 174.932480

## 10 Ariel wage 102.668810

## 11 Gaston wage 1.690623

## 12 Jasmine wage 2.166231

## 13 Peter wage 1192.371925

## 14 Alice wage 83.363705

## 15 Elsa schooling 14.000000

## 16 Mickey schooling 12.000000

## 17 Ariel schooling 11.000000

## 18 Gaston schooling 11.000000

## 19 Jasmine schooling 14.000000

## 20 Peter schooling 12.000000

## 21 Alice schooling 11.000000

## 22 Elsa schooling 14.000000

## 23 Mickey schooling 12.000000

## 24 Ariel schooling 11.000000

## 25 Gaston schooling 11.000000

## 26 Jasmine schooling 14.000000

## 27 Peter schooling 12.000000

## 28 Alice schooling 11.000000

## 29 Elsa exper 8.000000

## 30 Mickey exper 5.000000

## 31 Ariel exper 7.000000

## 32 Gaston exper 8.000000

## 33 Jasmine exper 10.000000

## 34 Peter exper 8.000000

## 35 Alice exper 6.000000

## 36 Elsa exper 8.000000

## 37 Mickey exper 5.000000

## 38 Ariel exper 7.000000

## 39 Gaston exper 8.000000

## 40 Jasmine exper 10.000000

## 41 Peter exper 8.000000

## 42 Alice exper 6.000000wages2 %>% spread("indicator", "values")## person wage schooling exper

## 1 Elsa NA 14 8

## 2 Mickey 174.932480 12 5

## 3 Ariel 102.668810 11 7

## 4 Gaston 1.690623 11 8

## 5 Jasmine 2.166231 14 10

## 6 Peter 1192.371925 12 8

## 7 Alice 83.363705 11 6

## 8 Elsa NA 14 8

## 9 Mickey 174.932480 12 5

## 10 Ariel 102.668810 11 7

## 11 Gaston 1.690623 11 8

## 12 Jasmine 2.166231 14 10

## 13 Peter 1192.371925 12 8

## 14 Alice 83.363705 11 6

Loops, conditionals, joins, and more

Revisiting basics

Assignment Operator

So far, when changing a data object, we have always been a bit repetitive:

mydataframe <- mydataframe %>% rename(NewVarName = OldVarName)Along with the standard pipe (%>%), by loading the magrittr package, you can also use the so-called “assignment pipe” (%<>%).

- The above rename with the assignment pipe appears as:

mydataframe %<>% rename(NewVarName = OldVarName)Lists

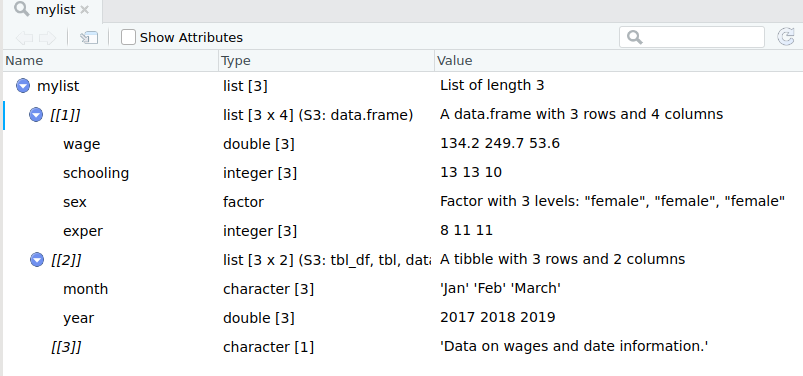

Another subtlety glossed over so far are lists.

As mentioned in module 1, vectors come in two forms: atomic vectors (with a single data type) and lists (with heterogenous data types).

Lists can take as inputs not only single-valued elements, but also vectors or data frames.

Creating a list from other objects is done with the list() function. The syntax of list is:

List Creation Example

wages_df; date_df; description## wage schooling sex exper

## 1 134.23058 13 female 8

## 2 249.67744 13 female 11

## 3 53.56478 10 female 11## month year

## 1 Jan 2017

## 2 Feb 2018

## 3 March 2019## [1] "Data on wages and date information."mylist <- list(wages = wages_df, dates = date_df, description)Where wages_df and date_df are data frames and description is a single character element.

List Creation Example ctd

Subsetting a list

To subset a vector/matrix/data frame, one uses single brackets, eg mydf[,].

To refer to an object of a list, use double brackets.

mylist[[3]]## [1] "Data on wages and date information."Note: The function list() does not take transfer the names of the data frames, so you will need to either subset by position or assign names to the list objects.

Extracting a list

An easy way of extracting an object from a list is with the extract2() function from magrittr. This allows you to extra a given list object by name or position.

wage_data <- mylist %>% extract2(1)

wage_data## wage schooling sex exper

## 1 134.23058 13 female 8

## 2 249.67744 13 female 11

## 3 53.56478 10 female 11The unlist function

Instead of creating more complicated data objects, sometimes formatted as list into a simple (atomic) vector. The unlist() function does this.

Example:

simple_list <-list(1,2,3,4)

str(simple_list)## List of 4

## $ : num 1

## $ : num 2

## $ : num 3

## $ : num 4simple_list %<>% unlist() %>% str()## num [1:4] 1 2 3 4

Iteration

For loops

For tasks that you want to iterate over multiple data frames/variables/elements, you may want to think about creating a loop.

- A loop performs a function/functions multiple times, across either a list of objects or a set of index values.

Syntax:

for(indexname in range) {

do stuff

}For loop across numeric values

for (i in 1:4){

print(i^2)

}## [1] 1

## [1] 4

## [1] 9

## [1] 16For loop across named elements

You can also loop over elements instead of values.

- In the last module exercises, you had to convert the type of many variables. Here’s one way you could do that with a loop:

nlsy97 <- import("./data/nlsy97.rds")

factor.vars <- c("personid","year","sex","race","region","schooltype")

for (i in factor.vars){

nlsy97[,i] %<>% unlist() %>% as.factor()

}

The map() function

For iterations over vectors and dataframes, the map() function is a great alternative to the for loop.

Map functions take a user-supplied function and iterate it over:

Elements for a vector

Objects of a list

Columns of a data frame

Map functions are much simpler to write than loops and are also generally a good bit faster.

- Sidenote: Map is a part of the tidyverse collection of packages. In base R, the apply() family of functions does roughly the same thing, but map() simplifies and improves this task.

Using the map() function

Syntax:

map(data, fxn, option1, option2...)Example:

nlsy97[,factor.vars] %<>% map(as.factor) Using class-specific map variants

There are multiple map variants that enforce a given data type on results. You should use these whenever you want output of a certain class.

map_lgl for logical vector

map_dbl for numeric vector

map_chr for character vector

map_df for a data frame

Example of difference with class-specific map variants

nlsy.sub <- nlsy97 %>% select(parentincome, motheredyrs, gpa)

nlsy.sub %>% map_dbl(IQR, na.rm=TRUE)## parentincome motheredyrs gpa

## 55947.2500 3.0000 2.8075nlsy.sub %>% map(IQR, na.rm=TRUE)## $parentincome

## [1] 55947.25

##

## $motheredyrs

## [1] 3

##

## $gpa

## [1] 2.8075Using map() with anonymous functions

map() works with not only predefined functions, but also “anonymous functions”— unnamed functions defined inside of map().

- Suppose I want the z-standardized values of the variables from the previous example:

# Create Z Transform

ztransform <- map_df(nlsy.sub, function(x)

(x - mean(x, na.rm=TRUE)) / sd(x, na.rm=TRUE)

)

### Did it work?

# Means

map_dbl(ztransform, function(x)

round(mean(x, na.rm=TRUE),10))## parentincome motheredyrs gpa

## 0 0 0# Standard deviations

map_dbl(ztransform, function(x)

round(sd(x, na.rm=TRUE),10))## parentincome motheredyrs gpa

## 1 1 1

Conditional Statements

If statements

“If statements” are also a useful part of programming, either in conjunction with iteration or seperately.

- An if statement performs operations only if a specified condition is met.

- An important thing to know, however, is that if statements evaluate conditions of length one (ie non-vector arguments).

- We will cover a vector equivalent to the if statement shortly.

Syntax

if(condition){

do stuff

}Example of an if statement

In the for loop example, the loop was indexed over only the columns of indicator codes.

Equally, the loop could be done over all columns with an if-statement to change only the indicator codes.

for (j in colnames(nlsy97)){

if(j %in% factor.vars){

nlsy97[,j] %<>% unlist() %>% as.factor()

}

}Multiple conditions

You can encompass several conditions using the else if and catch-all else control statements.

if (condition1) {

do stuff

} else if (condition2) {

do other stuff

} else {

do other other stuff

}Vectorized if statements

As alluded to earlier, if statements can’t test-and-do for vectors, but only single-valued objects.

Most of the time, you probably want to use conditional statements on vectors. The vector equivalent to the if statement is ifelse()

Syntax:

ifelse(condition, true_statement, false_statement)The statements returned can be simple values, but they can also be functions or even further conditions. You can easily nest multiple ifelses if desired.

An ifelse example

numbers <- sample(1:30, 7); numbers## [1] 29 11 13 22 27 12 30 ifelse(numbers %% 2 == 0,"even","odd")## [1] "odd" "odd" "odd" "even" "odd" "even" "even"Note: What if we tried a normal if statement instead?

if(numbers %% 2 == 0){

print("even")} else{

print("odd")}## [1] "odd"Multiple vectorized if statements

A better alternative to multiple nested ifelse statements is the tidyverse case_when function.

Syntax:

case_when(

condition1 ~ statement1,

condition2 ~ statement2,

condition3 ~ statement3,

)A case_when example

nums_df <- numbers %>% as.tibble() %>%

mutate(interval = case_when(

(numbers > 0 & numbers <= 10) ~ "1-10",

(numbers > 10 & numbers <= 20) ~ "10-20",

(numbers > 20 & numbers <= 30) ~ "20-30"))

nums_df[1:4,]## # A tibble: 4 x 2

## value interval

## <int> <chr>

## 1 29 20-30

## 2 11 10-20

## 3 13 10-20

## 4 22 20-30

Functions

When you should write a function

If you find yourself performing the same specific steps more than a couple of times (perhaps with slight variations), then you should consider writing a function.

A function can serve essentially as a wrapper for a series of steps, where you define generalized inputs/arguments.

Writing a function

Ingredients:

Function name

Arguments

Function body

Syntax:

function_name <- function(arg1, arg2, ...){

do stuff

}Function example

Let’s turn the calculation of even or odd that was completed earlier into a function:

# Make odd function

odd <- function(obj){

ifelse(obj %% 2 == 0,"even","odd")

}Notice that obj here is a descriptive placeholder name for the data object to be supplied as an argument for the function.

odd(numbers)## [1] "odd" "odd" "odd" "even" "odd" "even" "even"RStudio’s “Extract Function”

A useful way of writing simple functions when you’ve already written the code for a specific instance is to use RStudio’s Extract Function option, which is available from the code menu.

- Extract function will take the code chunk and treat any data objects referenced but not created within the chunk as function arguments.

Joins

Merging data

Shifting gears from programming…

Another staple task in applied work is combining data from multiple data sets. The tidyverse set of packages includes several useful types of merges (or “joins”):

left_join() Appends columns from dataset B to dataset A, keeping all observations in dataset A.

inner_join() Appends columns together, keeping only observations that appear in both dataset A and B.

semi_join() Keeps only columns of dataset A for observations that appear in both dataset A and B.

anti_join() Keeps only columns of dataset A for observations that do not appear in both dataset A and B.

Joining using keys

The starting point for any merge is to enumerate the column or columns that uniquely identify observations in the dataset.

For cross-sectional data, this might be a personal identifier or (for aggregate data) something like municipality, state, country, etc.

For panel data, this will typically be both the personal/group identifier and a timing variable, for example Sweden in 2015 in a cross-country analysis.

Mismatched key names across datasets

Sometimes the names of the key variables are different across datasets.

You could of course rename the key variables to be consistent.

But mismatched key names are easily handled by the tidyverse join functions.

Syntax:

join_function(x, y, by = c("x_name" = "y_name"))left_join

The left_join() is the most frequent type of join, corresponding to a standard merge in Stata.

- left_join simply appends additional variables from a second dataset to a main dataset, keeping all the observations (rows) of the first dataset.

Syntax:

left_join(x, y, by = "key")If the key is muliple columns, use c() to list them.

left_join example

# Look at the datasets

earnings## person_id wage

## 1 001 150

## 2 002 90

## 3 003 270educ## person_id schooling

## 1 001 12

## 2 003 8

## 3 004 16# Combine data

combined_data <- left_join(earnings, educ,

by="person_id")

# Print data

combined_data## person_id wage schooling

## 1 001 150 12

## 2 002 90 NA

## 3 003 270 8Notice that schooling is equal to NA for person ‘002’ because that person does not appear in the educ dataset.

inner_join

If you want to combine the variables of two data sets, but only keep the observations present in both datasets, use the inner_join() function.

combined_data <- inner_join(earnings, educ,

by="person_id")

combined_data## person_id wage schooling

## 1 001 150 12

## 2 003 270 8semi_join

To keep using only the variables in the first dataset, but where observations in the first dataset are matched in the second dataset, use semi_join().

- semi_join is an example of a filtering join. Filtering joins don’t add new columns, but instead just filter observations for matches in a second dataset.

- left_join and inner_join are instead known as mutating joins, because new variables are added to the dataset.

filtered_data <- semi_join(earnings, educ, by="person_id")

filtered_data## person_id wage

## 1 001 150

## 2 003 270anti_join

Another filtering join is anti_join(), which filters for observations that are not matched in a second dataset.

filtered_data <- anti_join(earnings, educ,

by="person_id")

filtered_data## person_id wage

## 1 002 90There are still other join types, which you can read about here.

Appending data

Finally, instead of joining different datasets for the same individuals, sometimes you want to join together files that are for different individuals within the same dataset.

- When join data where the variables for each dataset are the same, but the observations are different, this is called appending data.

The function for appending data in the tidyverse is:

bind_rows(list(dataframe1,dataframe2,...))

Manipulating text

Concatenating strings

The last type of data preparation that we will cover in this course is manipulating string data.

The simplest string manipulation may be concatenating (ie combining) strings.

- A great function for combining string in R is the glue() function, part of the Tiydverse glue package.

The glue function lets you reference variable values inside of text strings by writing the variable in curly brackets {} inside of the string.

Glue Example

date_df %<>% mutate(

say.month = glue("The month is {month}"),

mo.yr = glue("{month} {year}")

)

date_df## month year say.month mo.yr

## 1 Jan 2017 The month is Jan Jan 2017

## 2 Feb 2018 The month is Feb Feb 2018

## 3 March 2019 The month is March March 2019Glue Example 2

numbers <- c(1,2,3)

for (i in numbers){

print(glue("The magic number is {i}"))

}## The magic number is 1

## The magic number is 2

## The magic number is 3Extracting and replacing parts of a string

Other common string manipulating tasks include extracting or replacing parts of a string. These are accomplished via the str_extract() and str_replace() from the Tidyverse stringr package.

- We saw examples of these two functions in the last seminar exercise:

The arguments for each function are:

str_extract(string_object, "pattern_to_match")

str_replace(string_object, "pattern_to_match","replacement_text")By default, both function operate on the first match of the specified pattern. To operate on all matchs, add “_all" to the function name, as in:

str_extract_all(string_object, "pattern_to_match")Extract and replace example

In the last seminar, we created a “year” column from years indicated in the “variable” column text via the expression:

nlsy97$year <- str_extract(nlsy97$variable, "[0-9]+")After creating the “year” column, we then removed the year values from the values of the “variable” column by replacing these numbers with an empty string.

nlsy97$variable <- str_replace(nlsy97$variable, "[0-9]+","")Trimming a string

When working with formatted text, a third common task is to remove extra spaces before or after the string text.

- This is done with the str_trim() function. The syntax is:

str_trim(string, side = "left"/"right"/"both")Note, when printing a string, any formatting characters are shown. To view how the string looks formatted, use the ViewLines() function.

Using regular expressions with strings

Often we want to modify strings based on a pattern rather than an exact expression, as seen with the str_extract() and str_replace() examples.

Patterns are specified in R (as in many other languages) using a syntax known as “regular expressions” or regex.

Today, we will very briefly introduce some regular expressions.

Common Expressions

- To match “one of” several elements, refer to them in square brackets, eg: [abc]

- To match one of a range of values, use a hyphen to indicate the range: e.g. [a-z],[0-9]

- To match either of a couple of patterns/expressions, use the OR operator, eg: “2017|2018”

There are also abbreviation for one of specific types of characters

eg: [:digit:] for numbers, [:alpha:] for letters, [:punct:] for punctuation, and \(\textbf{.}\) for every character.

See the RStudio cheat sheet on stringr for more examples (and in general, as a brilliant reference to regex)

How many times to match?

Aside from specifiying the characters to match, such as “[0-9]”, another important component of regular expressions is how many time should the characters appear.

- “[0-9]” will match any part of a string composed of exactly 1 number.

- “[0-9]+” will match any part of a string composed of 1 or more numbers.

- “[0-9]{4}” will match any part of a string composed of exactly 4 numbers.

- “[0-9]*" will match any part of a string composed of zero or more numbers.

Examples with repetition

Suppose we want to extract year data that is mixed in with other data as well.

messy_var <- c(1,1987,2006,2010,307,2018)

str_extract(messy_var, "[0-9]")## [1] "1" "1" "2" "2" "3" "2"str_extract(messy_var, "[0-9]+")## [1] "1" "1987" "2006" "2010" "307" "2018"str_extract(messy_var, "[0-9]{4}")## [1] NA "1987" "2006" "2010" NA "2018"Escaping special characters

Often, special characters can cause problems when working with strings. For example, trying to add a quote can result in R thinking you are trying to close the string.

For most characters, you can “escape” (cause R to read as part of the string) special characters by prepending them with a backslash.

Example:

quote <- "\"Without data, you're just another person with an opinion.\"

- W. Edwards Deming."

writeLines(quote)## "Without data, you're just another person with an opinion."

## - W. Edwards Deming.Matching strings that precede or follow specific patterns

To match part of a string that occurs before or after a specific other pattern, you can also specify “lookarounds”, the pattern the match should precede or follow:

To match a string pattern x, preceded or followed by y:

y precedes x: “(?<=y)x”

y follows x: “x(?=y)”

Look around example

price_info <-c("The price is 5 dollars")

str_extract(price_info, "(?<=(The price is )).+")## [1] "5 dollars"str_extract(price_info, ".+(?=( dollars))")## [1] "The price is 5"

Web Scraping

Web scraping with Rvest

“Scraping” data from the web - that is, automating the retrieval of data displayed online (other than through API) is an increasingly common data analysis task.

Today, we will briefly explore very rudimentary web scraping, using the rvest package.

The specific focus today is only on scraping data structued as a table on a webpage. The basic method highlighted will work much of the time - but does not work for every table.

Using rvest to scrape a table

The starting point for scraping a web table with rvest is the read_html() function, where the URL to the page with data should go.

After reading the webpage, the table should be parsed. For many tables, the read_html can be piped directly into the html_table() function.

- If this works, the data should then be converted from a list into a dataframe/tibble.

If html_table() does not work, a more robust option is to first pipe read_html into html_nodes(xpath = “//table”) and then into html_table(fill=TRUE)

- html_nodes(xpath = “//table”) looks for all HTML objects coded as a table, hence

Web scraping example

tech_stock_names <- c("MSFT","AMZN","GOOGL","AAPL","FB","INTC","CSCO")

tech_stocks <- list()

for(j in 1:length(tech_stock_names)){

tech_stocks[[j]] <-read_html(

glue("https://finance.yahoo.com/quote/{tech_stock_names[j]}/history")) %>%

html_table() %>% as.data.frame() %>% mutate(stock = tech_stock_names[j])

}

tech_stocks %<>% bind_rows()

tech_stocks[1:5,c(1,6:8)]## Date Adj.Close.. Volume stock

## 1 Mar 07, 2019 110.39 25,321,300 MSFT

## 2 Mar 06, 2019 111.75 17,687,000 MSFT

## 3 Mar 05, 2019 111.70 19,538,300 MSFT

## 4 Mar 04, 2019 112.26 26,608,000 MSFT

## 5 Mar 01, 2019 112.53 23,501,200 MSFTAnother webscraping example

gini_list <-read_html("http://wdi.worldbank.org/table/1.3") %>%

html_nodes(xpath ="//table") %>% html_table(fill=TRUE)

gini_data <- gini_list %>% extract2(3) %>%

as.data.frame() %>% select(1:3)

gini_data[1:5,]## X1 X2 X3

## 1 Afghanistan .. ..

## 2 Albania 2012 29.0

## 3 Algeria 2011 27.6

## 4 American Samoa .. ..

## 5 Andorra .. ..

Project management and dynamic documents

RMarkdown documents

Reproducible R Reports

So far, we have been working purely with basic “R Script” files, which are very similar to Stata do-files.

But thanks largely to the knitr package, you can easily create reports that interweaves text and R code in a neatly structured manner.

Output can be structured as PDF documents, HTML webpages, Word documents, or various presentation formats including Beamer (LaTex) presentations.

The course website, lecture slides, and exercise instructions have all been generated in R.

Getting started

Reports of different file formats are generated using the knitr package.

Before installing knitr, make sure sure that you have a Latex distribution installed.

Then install the knitr package and initialize it in the usual manner.

# Run only once (ie in the console)

# install.packages("knitr")

# Initialize library

library("knitr")Knitr and RMarkdown

Knitr allows for the creation of documents structured using two different typesetting languages:

LaTex with the .RNW file

Markdown (specifically RMarkdown), which was originally created as a simple language for structuring HTML markup.

For this course, we will focus on the RMarkdown format, which has become the dominant method for “knitting” document because of it’s lightweight and flexibility.

- More information about how to generate R reports using the Latex format can be found at https://rpubs.com/YaRrr/SweaveIntro.

Creating an RMarkdown document

After installing knitr, to create an RMarkdown document, go to File—New File—R Markdown.

A popup shows up to ask enter the document Title and Author, as well as what type of document you want to create.

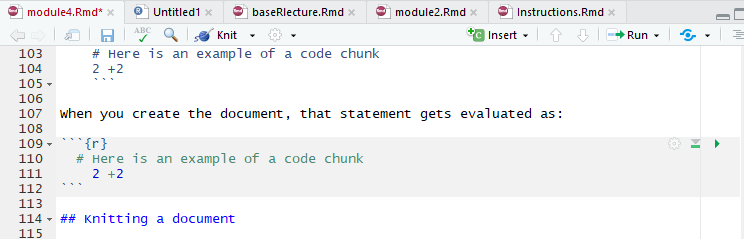

Writing and Code in RMarkdown

In RMarkdown, expository writing and code “chunks” are differentiated in writing code in specific code chunks.

```{r}

# Here is an example of a code chunk

2 +2

```When you create the document, that statement gets evaluated as:

# Here is an example of a code chunk

2 +2 ## [1] 4Inline Chunks

You can also include inline code by using initializing with a backtick and the letter r (no space between), writing the code, then closing the chunk with another backtick.

- For example:

`r 2+2`

Knitting a document

To generate a document in the desired output format from a RMarkdown document, you need to “Knit” the document, which appears as a clickable icon on the menu atop the script pane.

You do not need to Knit a document after every change, however. You can just as easily run the code chunks. There are specific specific buttons to run either the current chunk or all of the chunks above a given chunk.

Writing outside of code chunks

Anything not written inside of these bacticked sections is interpret as normal writing.

RMarkdown makes styling your writing particularly easy. Some common formatting options include:

- Headers: Headers are defined using hashes (#)

- A single # indicates a top level heading (and bigger font), while each additional hash indicates a smaller heading size

- So while # is the largest heading size, #### is a small heading

- Bold: To bold text, wrap it in two asterisks:

**Bold Statement** - Italics: To italicize text, wrap in a single asterisk:

*Italics Statement*

Lists and Latex Input

Latex input: Most LaTex commands (except for generally longer multi-line structures) can be included in RMarkdown documents just as you’d write them in Tex document.

Lists/Bullet Points: Like the bullet points here, you will often want to structure output using lists.

To create a bulleted list, start each bulleted line with a dash (-).

Make sure to leave an empty line between the start of the list and any other text.

To make an indent “sub-list”, start the sub-list with a plus sign (+) and use tab to indent the line twice for each of the sub-list items.

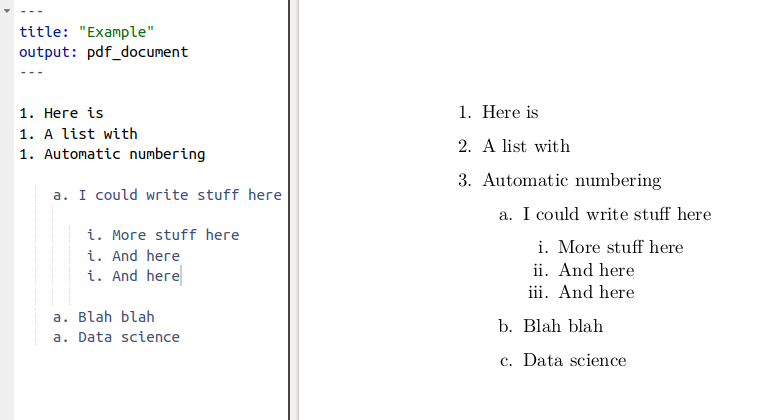

Ordered Lists

Ordered lists use the same indent rules as unordered lists, but with no dashes or plus signs.

- You can also generally uses automatic numbering by repeating the initial letter or number (e.g.)

Seperating Lines in RMarkdown

Something you might wonder is how to obey the RStudio 80-character margins while allowing your text to wrap normally in the generated documents.

The answer lies in how new lines are treated in RMarkdown documents.

- If the line ends with one space or less, a new line in RMarkdown will not be treated as a new line in the documents generated.

Code chunk options

There are several output options you can specify for how R code and the code output are expressed in reports. These options are expressed as options in the {r} declaration at the top of the chunk.

echo=FALSE: do not show the R code itself (but potentially the code output depending on other chunk options).

include=FALSE: do not show the R code or the output in the document.

eval=FALSE: do not actually execute the code inside the chunk, only display it.

results=“hide”: run the code but do not show the results output.

warning=FALSE / message=FALSE: do not show warnings or messages associated with the R code.

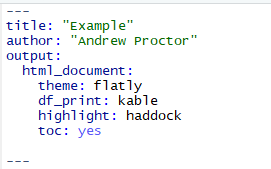

Output options

When a RMarkdown document is generated in a given output format, there are several common things you can do to customize the appearance of the document.

To add options to your document, indent the name of the output type to an indented new line and a colon to it. Then indent under the output type and add the desired options.

Before

After

Common output options

Here are a few common options:

table of contents: to include a table of contents in your document, use the toc: yes option.

To change the way data frame output is printed, use the df_print option. Good options are kable or tibble.

To add code highlighting for R chunks, use the highlight: option.

- Options include: default, tango, pygments, kate, monochrome, espresso, zenburn, haddock, and textmate.

You can also specify output themes for html documents and beamer presentations. For html documents, possible themes are listed here while beamer themes are typically supplied by .sty files in your project folder.

Working Directories in RMarkdown

In RMarkdown documents, the working directory is automatically set to the folder in which the RMarkdown document is saved.

From there, you can use relative file paths. If data etc is in the root of the project folder, then just refer to the file name directly.

If data is in a subfolder, eg data, use a relative path like:

import("./assets/mydata.rds"")R Notebooks

Aside from the standard RMarkdown documents that we’ve covered so far, another format worth mentioning is the R Notebook format.

R Notebooks essentially adapt html RMarkdown documents to be even more similar to something like Jupyter Notebooks.

With R Notebooks, you can Preview documents without knitting them over again.

The document also generally has the Notebook-style code-output-code layout.

RStudio Projects

Projects Intro

In addition to using RMarkdown documents to make your scripts and reports more compelling, another process upgrade is using RStudio Projects.

Projects are useful because they:

- Define a project root folder

- Save a RStudio environment that is unique to each project

- Allow for easy version control

Working folder benefits of a Project

A project root folder is not only preferable to the need to use setwd(), but also to the default working directory used in RMarkdown documents outside of R Projects. Why?

Because for substantial research projects, you likely will have a lot of files that you split into different subfolders, one of which is probably something like code.

- In this case, you’d need to use somewhat convoluted relative file paths to indicate that the paths should be from the parent folder of code.

Using RStudio Projects

To create a RStudio Project, go to File – New Project. From there, you can choose whether to create a new directory, use an existing directory, or use a Version Control repository.

In practice, I’d suggest you use either a New Directory or Version Control depending on whether or not you want to sync your scripts to GitHub.

- We’ll go over version control shortly.

Once you have created a Project, you can either open it from the File Menu or by opening the .RProj file in the project directory root.

Project workflow structure

Sample Folder Structure:

- code/

- data_prep/

- analysis/

- data/

- raw_data/

- derived_data/

- docs/

- report/

- presentation/

- images/

- results/

- tables/

- figures/

Some workflow management packages:

Reproducibility and the R environment

A concern with any type of analysis project is that over time, the analysis environment can change – making it harder to reproduce results.

- The most common concern is that packages may change or become obsolete

- But also the program itself (R) can change, the OS can change, etc. All potentially leading to the inability to reproduce results.

Managing the R environment

- A solution to evolving package ecosystems built-in to R Projects is packrat.

- packrat can create a package library specific to the individual project.

- A more robust reproducibility solution is with Docker, which creates “containers” in which not only packages are fixed, but also the software (and even the virtual machine).

Version Control

What is version control?

Version control is a means to track changes made to files

Version control allows you to:

- See a history of every change made to files -Annotate changes -Allow you to revert files to previous versions

Local Version Control with Git

The most popular software for managing version control is Git.

There’s a good chance you’ve at least seen GitHub before, which is an online remote location for storing version control repositories.

The Git software, however, is equally adept at managing version control on your local computer.

Once Git is installed (and recognized in RStudio), you can use Projects to perform local version control.

In File – New Project – New Directory, once you have Git installed there is a checkbox that can be selected to enable to “Create a Git repository”.

A repository is a location that Git uses to track the changes of a file or folder, here the project folder. The Git repository is stored as a folder named “.git” in the project root.

Creating a Project in this manner with a Git repository will enable version control on your local computer.

Remote version control with GitHub

In addition to local version control, you can also back up the history of file changes to online repositories using GitHub.

GitHub connects to the local Git repository on your computer, “pushing” and “pulling” changes between the local and remote repositories.

This also allows for easy collaboration on coding projects, as multiple person can sync to files by connecting to the remote repository.

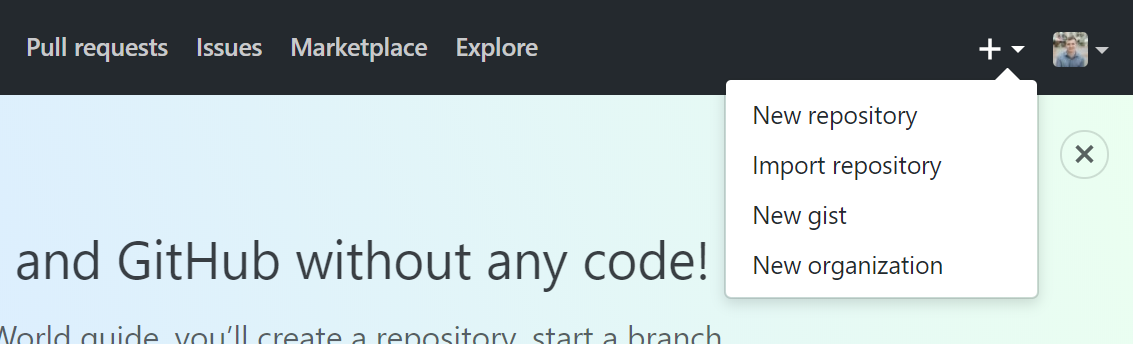

Using GitHub for remote version control

With a GitHub account, you can create a new online repository by clicking the “+” icon in the top right of a GitHub page, and then clicking “New Repository”.

Setting up a new repository

From there, you need to:

Supply GitHub with a repository name (think folder name)

Choose whether or not the repository should be public or private (ie whether or not you want other people to be able to visit your GitHub page and view the repository).

- If you have a GitHub education account, then Private repositories are free. Otherwiwse, you’d need a paid GitHub subscription.

Click on the checkbox to enable “Initialize this repository with a README”.

- Each repository is required to have a readme file, which you may want to comment but is not strictly necessary. Commenting uses Markdown, which is essentially the same as RMarkdown!

Using a Remote Repository with GitHub

Once you’ve created an online repository, Projects once again allows you to easily connect RStudio with the repository.

To setup a project for use with GitHub, create a New Project and select Version Control instead of New Directory.

From there, simply choose “Git” and then copy the url of the repository from GitHub into RStudio.

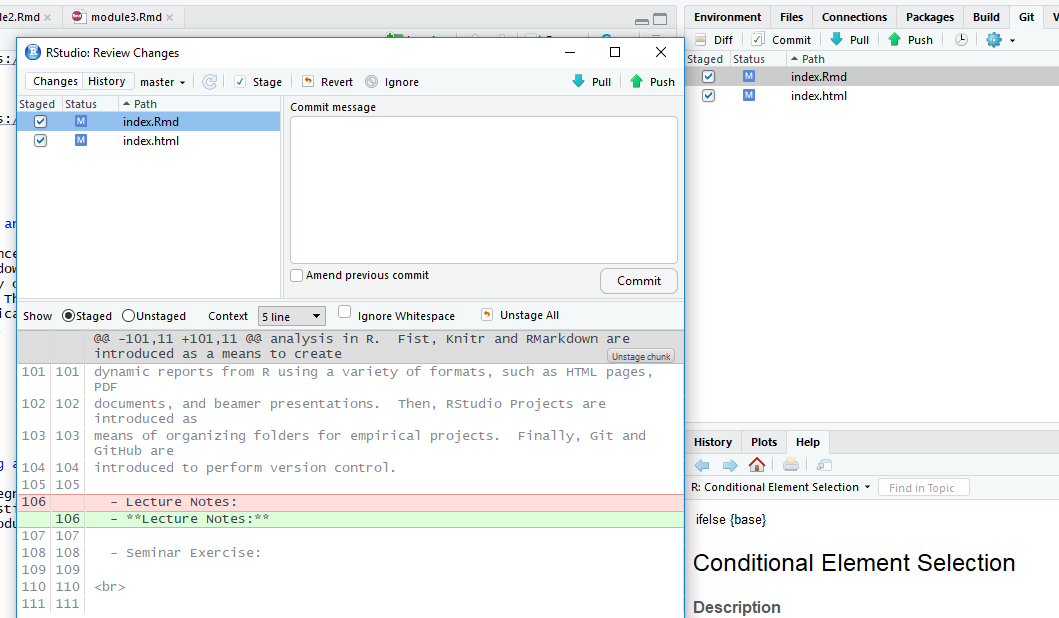

Tracking changes with Git

Once you have a Project setup with version control, the first key component of tracking changes is “Committing” them to the repository

- A “commit” is an update that saves revisions of files into the Git repository.

You can commit changes by going to the “Git” tab in the upper right-hand side of the RStudio IDE.

In the Git tab, any files that have changed since the last commit are listed. From there, click on the files you’d like to commit and click on the commit button.

A Commit box appears which shows you the changes since the last revision and asks for a commit message, where you should very briefly describe the changes.

Syncing changes with a remote repository

If you are just tracking changes with a local repository, commit is sufficient to manage version control.

But if you are using version control with an remote (ie online) repository, you will two other steps to make sure changes are sync between the local repository and online.

To send changes made locally to the online repository, after comitting changes click on “Push.”

To sync changes from the online repository to local files, click on “Pull”.

Viewing previous commits

To view previous versions of the files (along with annotations supplied with the commit message), click on the clock icon in the Git pane.

From there, you can see not only a “difference” view of the file changes, but you can also open the document exactly how it was written in a previous commit.

From there, if you wanted to revert changes, you could explicitly revert the file with Git, or simply copy over the file with code from the previous commit — my preferred method of reverting changes.

Regression analysis and data visualization

Regression Basics

Linear Regression

The basic method of performing a linear regression in R is to the use the lm() function.

- To see the parameter estimates alone, you can just call the

lm()function. But much more results are available if you save the results to a regression output object, which can then be accessed using the summary() function.

Syntax:

myregobject <- lm(y ~ x1 + x2 + x3 + x4,

data = mydataset)CEX linear regression example

lm(expenditures ~ educ_ref, data=cex_data)##

## Call:

## lm(formula = expenditures ~ educ_ref, data = cex_data)

##

## Coefficients:

## (Intercept) educ_ref

## -641.1 109.3cex_linreg <- lm(expenditures ~ educ_ref,

data=cex_data)

summary(cex_linreg)##

## Call:

## lm(formula = expenditures ~ educ_ref, data = cex_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -541109 -899 -690 -506 965001

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -641.062 97.866 -6.55 5.75e-11 ***

## educ_ref 109.350 7.137 15.32 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 7024 on 305970 degrees of freedom

## (75769 observations deleted due to missingness)

## Multiple R-squared: 0.0007666, Adjusted R-squared: 0.0007634

## F-statistic: 234.7 on 1 and 305970 DF, p-value: < 2.2e-16Formatting regression output: tidyr

With the tidy() function from the broom package, you can easily create standard regression output tables.

library(broom)

tidy(cex_linreg)## # A tibble: 2 x 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) -641. 97.9 -6.55 5.75e-11

## 2 educ_ref 109. 7.14 15.3 5.75e-53Formatting regression output: stargazer

Another really good option for creating compelling regression and summary output tables is the stargazer package.

- If you write your reports in LaTex, it’s especially useful.

# From console: install.packages("stargazer")

library(stargazer)

stargazer(cex_linreg, header=FALSE, type='html')| Dependent variable: | |

| expenditures | |

| educ_ref | 109.350*** |

| (7.137) | |

| Constant | -641.062*** |

| (97.866) | |

| Observations | 305,972 |

| R2 | 0.001 |

| Adjusted R2 | 0.001 |

| Residual Std. Error | 7,024.151 (df = 305970) |

| F Statistic | 234.746*** (df = 1; 305970) |

| Note: | p<0.1; p<0.05; p<0.01 |

Interactions and indicator variables

Including interaction terms and indicator variables in R is very easy.

Including any variables coded as factors (ie categorical variables) will automatically include indicators for each value of the factor.

To specify interaction terms, just specify

varX1*varX2.To specify higher order terms, write it mathematically inside of I().

Example:

wages_reg <- lm(wage ~ schooling + sex +

schooling*sex + I(exper^2), data=wages)

tidy(wages_reg)## # A tibble: 5 x 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) -2.05 0.611 -3.36 7.88e- 4

## 2 schooling 0.567 0.0501 11.3 3.30e-29

## 3 sexmale -0.326 0.779 -0.418 6.76e- 1

## 4 I(exper^2) 0.00752 0.00144 5.21 2.03e- 7

## 5 schooling:sexmale 0.143 0.0660 2.17 3.01e- 2Setting reference groups for factors

By default, when including factors in R regression, the first level of the factor is treated as the omitted reference group.

- An easy way to instead specify the omitted reference group is to use the relevel() function.

Example:

wages$sex <- wages$sex %>% relevel(ref="male")

wagereg2 <- lm(wage ~ sex, data=wages); tidy(wagereg2)## # A tibble: 2 x 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) 6.31 0.0775 81.5 0.

## 2 sexfemale -1.17 0.112 -10.4 6.71e-25Useful output from regression

A couple of useful data elements that are created with a regression output object are fitted values and residuals. You can easily access them as follows:

- Residuals: Use the residuals() function.

myresiduals <- residuals(myreg)- Predicted values: Use the fitted() function.

myfittedvalues <- fitted(myreg)

Model Testing